Pyramid Position Encoding Generator

A Transformer-based multiple instance learning approach, supported by Jordan University of Science and Technology.

Coronary Artery Disease: a weakly supervised learning-based method

This project is supported by Jordan University of Science and Technology. Alongside this research, we explored a paper that introduced a multiple instance learning approach based on the Transformer. Our goal is to redesign the PPEG (Pyramid Position Encoding Generator) method for more efficient training by improving its convolution with the Fast Fourier Transform. We call the resulting method Fast Fourier Positional Encoding (FFPE).

Note: the implementation is still in progress while we collect a dataset of coronary artery disease cases. For now, we have tested the approach on data from the Kaggle RSNA Screening Mammography Breast Cancer Detection competition.

Introduction

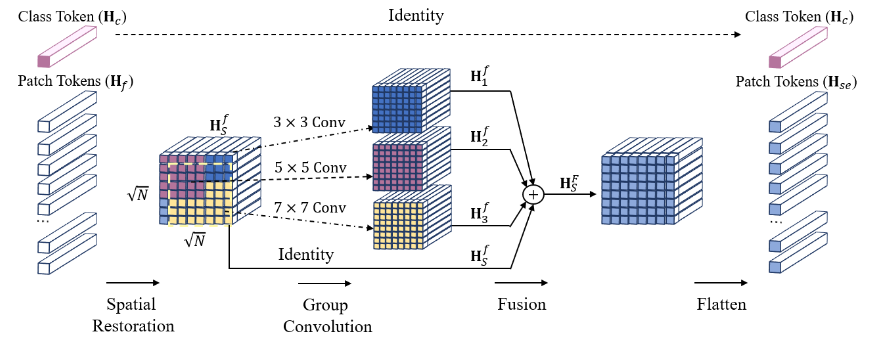

Multiple instance learning (MIL) is a powerful tool for solving weakly supervised classification in whole slide image (WSI) based pathology diagnosis. However, current MIL methods are usually based on the independent and identically distributed (i.i.d.) hypothesis, and thus neglect the correlation among different instances. To address this problem, we proposed a new framework, called correlated MIL, and provided a proof of convergence. Based on this framework, we devised a Transformer-based MIL (TransMIL), used here together with the Fast Fourier Transform to enhance the Pyramid Position Encoding Generator (PPEG) and its linear projection.
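To make the idea of replacing convolution with the Fast Fourier Transform more concrete, here is a minimal NumPy sketch. It is not the code in this repository. It assumes that the positional encoding reshapes the instance tokens into a 2D feature map and adds the outputs of several zero-padded convolutions with odd kernel sizes (7, 5 and 3 here) to the input, one channel at a time. The function names `direct_conv2d_same`, `fft_conv2d_same` and `ppeg_like` are hypothetical and chosen only for this illustration.

```python
import numpy as np


def direct_conv2d_same(x, k):
    """Reference: zero-padded 'same' cross-correlation with an odd-sized kernel."""
    kh, kw = k.shape
    ph, pw = kh // 2, kw // 2
    xp = np.pad(x, ((ph, ph), (pw, pw)))  # zero padding on every side
    out = np.zeros(x.shape, dtype=float)
    for i in range(x.shape[0]):
        for j in range(x.shape[1]):
            out[i, j] = np.sum(xp[i:i + kh, j:j + kw] * k)
    return out


def fft_conv2d_same(x, k):
    """Same result computed with the convolution theorem (odd-sized kernel assumed)."""
    h, w = x.shape
    kh, kw = k.shape
    # Pad both signals to the full linear-convolution size so that the
    # circular convolution computed by the FFT does not wrap around.
    fh, fw = h + kh - 1, w + kw - 1
    # Cross-correlation equals convolution with the kernel flipped on both axes.
    k_flipped = k[::-1, ::-1]
    X = np.fft.rfft2(x, s=(fh, fw))
    K = np.fft.rfft2(k_flipped, s=(fh, fw))
    full = np.fft.irfft2(X * K, s=(fh, fw))
    # Crop the central region to get an output with the input's shape.
    ph, pw = kh // 2, kw // 2
    return full[ph:ph + h, pw:pw + w]


def ppeg_like(feat, kernels, conv=fft_conv2d_same):
    """Assumed PPEG-style encoding: the input plus the sum of its convolutions."""
    return feat + sum(conv(feat, k) for k in kernels)
```

Calling `ppeg_like(feat, kernels, fft_conv2d_same)` and `ppeg_like(feat, kernels, direct_conv2d_same)` on the same single-channel map should agree up to floating-point error. The FFT route tends to pay off for large kernels or large feature maps, while small kernels are often faster with direct convolution, so any speed-up should be measured on the actual data.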

- Set up the environment:

  - Create the environment: `conda create --name TransFFT-MIL python=3.6`

  - Install the requirements: `pip install -r requirements.txt`

- Run the code:

  - Train the model. <br> Note: in our experiments we redeveloped two positional encoding methods, FFTPEG and FF_ATPEG. The method in use can be changed in the TransFFPEG.py file.

    `python train.py --stage 'train' --gpus 0 --Epochs 200`

The full code is available on GitHub; read more about the project there.